Flags are flooding our streets in Australia. There have been, and continue to be, many world conflicts. However, over the past two years, the Israel-Gaza conflict has dominated the news and social media platforms, especially TikTok, the current news site for young adults. TikTok has developed a platform for creativity and learning, while also fostering an environment where individuals can express their opinions on a topic. Yet, TikTok’s algorithm has a dark side which is having a dangerous impact, especially on topics related to world conflicts. As a result, young people are trapped, knowingly or unknowingly, in a political echo chamber. The algorithm amplifies partisanship, and we are all victims of it.

TikTok’s algorithm keeps Australian young adults in a political echo chamber, and in recent times this can be seen in relation to the Israel-Gaza conflict. The political echo chambers produced by TikTok’s algorithm amplify strong bias and limit exposure to other perspectives. As a result, they shape what Australian young adults believe and communicate about global conflicts, such as the political situation of the Israel-Gaza conflict.

Algorithmic Personalisation.

Users often feel that TikTok knows them a little too well for comfort, and theories have circulated on the platform that the app listens in on users’ private lives. In reality, TikTok’s algorithm is simply personalising the “For You Page” (FYP) based on prior engagement. The algorithm has been viewed as both awe-inspiring technology and a profound mystery; however, there is no mystery. When new users set up their accounts, the algorithm draws on information such as their age, sex, and whom they already follow (The Wall Street Journal, 2021b). It then determines which videos appear on the FYP, and in what order, using signals such as likes, saves, comments, follows, and how long a user watches a particular video (Lang, 2025). Now and then, the algorithm introduces a new page or type of content on a user’s FYP so that it can understand their preferences in more detail and tailor the feed closely to them (Lang, 2025).
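The mechanism described above can be illustrated with a small Python sketch. This is not TikTok’s actual code: the signal weights, the `explore_rate` parameter, and the function names `topic_affinity` and `build_feed` are all hypothetical, chosen only to show how engagement signals could be combined into a ranking with an occasional exploratory video.

```python
import random
from collections import defaultdict

# Hypothetical weights for each engagement signal; the real values are not public.
SIGNAL_WEIGHTS = {"like": 1.0, "save": 2.0, "comment": 2.0, "follow": 3.0}
WATCH_WEIGHT = 4.0  # applied to the fraction of a video that was watched (0.0 to 1.0)


def topic_affinity(history):
    """Add up weighted engagement per topic from a list of interaction records."""
    affinity = defaultdict(float)
    for event in history:
        score = WATCH_WEIGHT * event["watch_fraction"]
        score += sum(SIGNAL_WEIGHTS[s] for s in event["signals"])
        affinity[event["topic"]] += score
    return dict(affinity)


def build_feed(candidates, history, explore_rate=0.1, rng=None):
    """Order candidate videos by topic affinity, occasionally promoting an unfamiliar topic."""
    rng = rng or random.Random()
    affinity = topic_affinity(history)
    # Videos on topics the user engaged with most are placed first.
    ranked = sorted(candidates, key=lambda v: affinity.get(v["topic"], 0.0), reverse=True)
    # Exploration step: sometimes move a video from a never-seen topic to the top.
    unseen = [v for v in ranked if v["topic"] not in affinity]
    if unseen and rng.random() < explore_rate:
        probe = rng.choice(unseen)
        ranked.remove(probe)
        ranked.insert(0, probe)
    return ranked


history = [
    {"topic": "politics", "watch_fraction": 1.0, "signals": ["like", "follow"]},
    {"topic": "cooking", "watch_fraction": 0.25, "signals": []},
]
candidates = [
    {"id": 1, "topic": "cooking"},
    {"id": 2, "topic": "politics"},
    {"id": 3, "topic": "sport"},
]
feed = build_feed(candidates, history, explore_rate=0.0)
print([video["id"] for video in feed])  # prints [2, 1, 3]
```

Even in this simplified form, the topic a user has engaged with most rises to the top of the feed, and each further interaction with it increases its lead over everything else.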

Though TikTok’s algorithm is no mystery, the app has attracted interest and curiosity because of how proficient it is at reading users’ preferences and steering them towards one of its many “sides,” while also revealing users’ desires, even secret ones, to themselves (Smith, 2021). In a New York Times article (2021), Mr Chaslot, who researched TikTok’s algorithm, commented on how “crazy” it is for TikTok to accumulate data on kids and have the power to direct their lives. This is possible because the algorithm is able to detect an individual’s interests and gain sensitive information, which brings a high risk of exploitation. This could lead to micro-targeting and addiction to the platform (Smith, 2021).

TikTok’s goal is for users to stay on the app for as long as possible. The TikTok document referred to in the Times article confirms this claim, as well as the belief held by some analysts that the algorithm’s recommendations pose a social threat (Smith, 2021): “That process can sometimes lead young viewers down dangerous rabbit holes” (Smith, 2021).

Research shows algorithmic recommendation systems amplify pre-existing biases and interests (Jacobsen, 2019b). Australian young adults engaging with only one side of the Israel–Gaza conflict are increasingly shown similar partisan content, which inevitably narrows their worldview on the issue and discourages broader understanding and critical thinking.

Political Communication and Polarisation.

TikTok’s algorithm creates political echo chambers, resulting in strong biases and poor communication. Echo chambers foster polarisation and determine how young adults communicate on political issues. TikTok not only informs but also fuels polarised discussions online and in real time among Australian young adults. Echo chambers reinforce users’ beliefs through repeated interaction with like-minded peers and sources: “Selective exposure and confirmation bias” (Cinelli et al., 2021b). Users tend to seek out content that matches their pre-existing opinions on an issue, and form connections with like-minded people (Cinelli et al., 2021b). TikTok’s algorithm and the way it collects data shape what content the user views and how they interact with it. This reinforces selective exposure and contributes to political echo chambers on TikTok (Li et al., 2025). Studies highlight how echo chambers reduce exposure to opposing views and foster hostility (Cinelli et al., 2021b). Political echo chambers are prominent on TikTok because of the number of young adults who receive the majority of their news from the platform. The number of people using TikTok as their source of news has doubled since 2020 in the USA (Tomasik & Matsa, 2025). TikTok’s users are predominantly young, with over two thirds under thirty-four years of age (Meltwater, n.d.). The algorithm tailors content based on user behaviour, often introducing biases and limiting exposure to diverse viewpoints (Bartley et al., 2021).

Together, TikTok’s algorithm and people’s existing ideologies shape the political viewpoints of young adult users. However, there is another element in force: biases within the platform’s recommendation system (Li et al., 2025). TikTok’s biases are shown to stem not only from a user’s convictions, followers, and personal data, but also from the paid advertisements placed on the platform by specific companies, and from the recommendation system that TikTok controls.

In October 2023, moderators of TikTok content were instructed to allow certain Hamas- and Hezbollah-related content on the app as long as the content did not incite violence or praise Hamas (Farah, 2023). However, this did not last long. Although Hamas is known as a violent terrorist group, the TikTok protocols (guidance) eventually allowed “praise of Hamas or Hezbollah only in the context of discussion that focuses on their administrative, political, and social welfare body without mention of their violent acts” (Farah, 2023). Additionally, the protocols permitted Hamas and Hezbollah flags in the platform’s content (Farah, 2023).

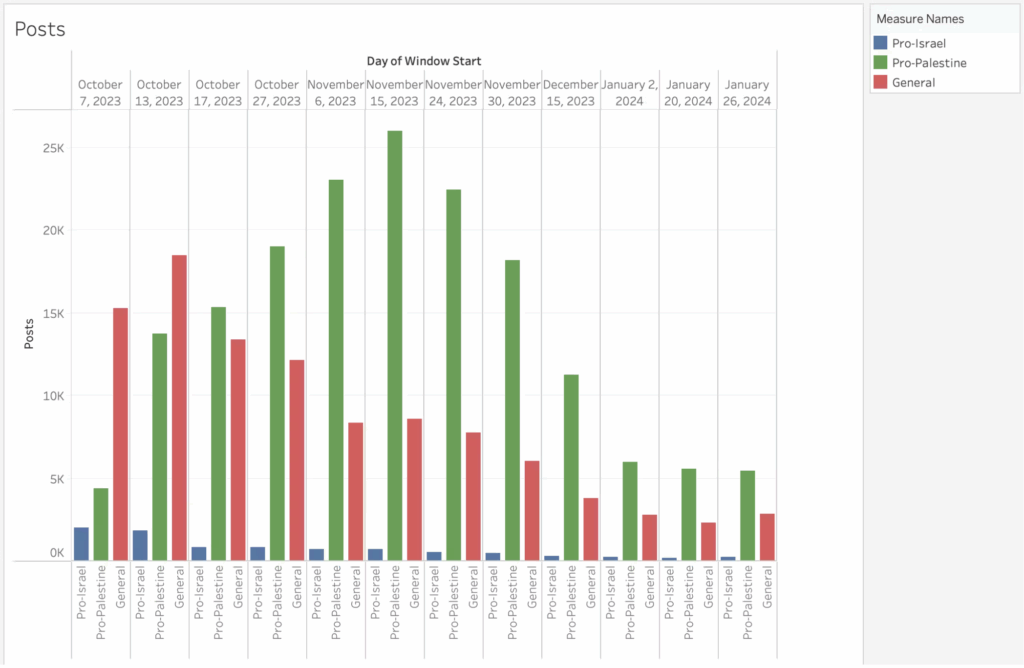

For months, the leading content was influencers using TikTok challenges to increase pro-Palestine support (Scott et al., 2024). Approximately twenty times more pro-Palestine content was produced over the four months following October 7th (Edelson, 2024).

TikTok ads also influence a user’s algorithm and their perceptions of global politics. An interview from Forbes Breaking News (2024) explains that TikTok’s ad policies denied war-related ads until October 7th. At that crucial turning point, the company showed bias: it rejected Israeli ads showing Israeli hostages while, at the same time, accepting “ads from humanitarian aid groups seeking donations that showed destruction in Gaza” (Maheshwari, 2024). A major issue was the lack of policy information the app disclosed, especially regarding advertising, content boundaries, and the control advertisers have over what content is displayed on the app (Forbes Breaking News, 2024). By denying Israeli hostage ads but approving the graphic imagery of pro-Palestinian ads, TikTok showed inconsistency between its written and applied ad policies (Forbes Breaking News, 2024): “Mr. Herscowitz said he was also concerned that TikTok applied its ad policies inconsistently” (Maheshwari, 2024). Since 2024, TikTok has adjusted its policy to permit war-related ads, which has enabled humanitarian campaigns provided they strictly display only victims of war. As a result, groups like the Missing Families Forum have been granted two ads on the platform (Forbes Breaking News, 2024). However, at the critical time immediately following the October 7th attack, the majority of content and ads shown were pro-Palestinian, which shaped the algorithm’s content and users’ bias through one-sided communication (Forbes Breaking News, 2024). This reveals how a powerful company’s bias towards one side of a conflict can affect the consumer.

TikTok has changed the platform since the commencement of the Israel-Gaza conflict, and as a result it is harder for users to see and know what content other users have (Forbes Breaking News, 2024). Several studies have shown that the algorithm is powerful, and perhaps even dangerous, in how it can shape young users’ minds, especially their thought patterns on political issues, as well as manipulate the news itself (Notley, Dezuanni, & Park, 2023).

Australia’s Home Affairs Minister, Ms O’Neil, spoke about echo chambers and the dangerous influence they have on Australian citizens: “Democracy is too precious, and important” to let companies “dictate terms to Government, or to our citizens” (Nightly, 2024). Political echo chambers online have an effect offline, as seen with campus protests (Spring, 2023): “University campus protests have erupted on both sides of the Atlantic” (Scott et al., 2024).

TikTok was originally a place for freedom of speech; however, users are discovering that their freedom on the platform is censored and controlled by the platform (Farah, 2023). Algorithmic censorship affects how users communicate online and in real time, as well as “significantly affect(ing) freedom of expression, access to information, and public discourse” (Mohydin, 2023). Governments can also censor social media content. This can be seen in Israel’s November 2023 amendment to its Counterterrorism Law (Article 24) (Adalah, 2023), under which any publisher or viewer who is supportive of a terrorist organisation, such as ISIS or Hamas, can be prosecuted and face up to two years of imprisonment (Mohydin, 2023). Some view this as an infringement on freedom of speech and a muzzle on legitimate political expression (Mohydin, 2023). And yet pro-Palestinian supporters can be seen evading the algorithm’s censors through algospeak (Kreuz, 2023), which is the use of substitute words or emojis to stand in for another word or reference (Mohydin, 2023). Equality within the algorithm is crucial for effective and inclusive communication: “Certain voices or communities may be disproportionately affected, limiting their ability to participate in the online discourse surrounding the conflict” (Mohydin, 2023).

How Echo Chambers Shape Debate in Australia.

Algorithmic echo chambers can distort public debate and weaken democratic communication (Pratap & Pathak, 2025). Research shows that echo chambers reduce critical engagement and increase partisan mobilisation (Sunstein, 2017). TikTok’s algorithm feeds off highly engaging, emotional and extreme content in order to profit from higher user engagement (Stark et al., 2020). The algorithm’s recommendation system amplifies misinformation and weakens communication on political matters (Munir, 2025). “Echo chambers further polarise people, making effective communication impossible” (Munir, 2025).

Users search for content that already agrees with their ideologies and actively ignore opposing views or information that contradicts their beliefs. This results in echo chambers that are comfortable but produce a society with poor critical evaluation skills (Munir, 2025). Moreover, journalists are now unable to strictly filter what circulates, which leads to “a disrupted public sphere in which disinformation narratives are able to circulate freely” (Stark et al., 2020).

TikTok has become a place of selective communication that leads to “isolated and polarized subgroups” (Pratap & Pathak, 2025). Echo chambers and isolation within political beliefs have also appeared in TikTok’s own workplace. Since the attack on October 7th, several Jewish employees of TikTok have felt isolated as well as dissatisfied with the company’s handling of in-house criticism of Israel and the dialogue around the war (Maheshwari, 2024). Jewish employees have faced insensitive communication from colleagues, as well as internal work profiles showcasing Palestine flags and nationalist slogans that many feel represent Israel’s annihilation (Maheshwari, 2024). TikTok’s employees have internal online support group chats, which have segregated the Israeli and pro-Palestine employees (Maheshwari, 2024); this is an example of selective communication and bias in real time among the people who work for the platform.

For young adults in Australia, TikTok echo chambers risk narrowing perspectives on complex global conflicts like Israel–Gaza. This can limit constructive debate and undermine the broader consideration of issues, which relies on exposure to diverse viewpoints. The echo chambers and polarised political thinking fuelled by social media are affecting Australian society: “These perceptions are fostered online and can translate into the way Australians view and treat each other in real life” (Carland, 2024). The echo chambers surrounding the Israel-Gaza conflict are creating division in Australia: “The ‘echo chambers’ of social media are fuelling a rise in antisemitism and Islamophobia that is tearing at the social fabric of the country” (Nightly, 2024).

The echo chambers are fuelling hatred towards the opposing side, and this is seen in the violent protests within Australia (Nightly, 2024). Ms O’Neil refers to hostility toward Jewish Labor MP Josh Burns, whose office was vandalised and set on fire, as well as to how protests were “preventing vulnerable people from accessing government services” (Nightly, 2024).

TikTok’s algorithm creates political echo chambers, and this is evident in the strong bias and deficient political understanding of the Israel-Gaza conflict among Australian young adults. TikTok’s algorithm shapes how Australian youth engage with political communication and critical debate. Understanding TikTok’s algorithmic influence is key to fostering healthier online communication and better critical engagement with political issues. TikTok is having a significant influence on how our country discusses important issues. Civil debate, with the freedom to express opposing views without penalty, is being threatened. The online echo chamber is reverberating throughout all aspects of society and shaping the future.