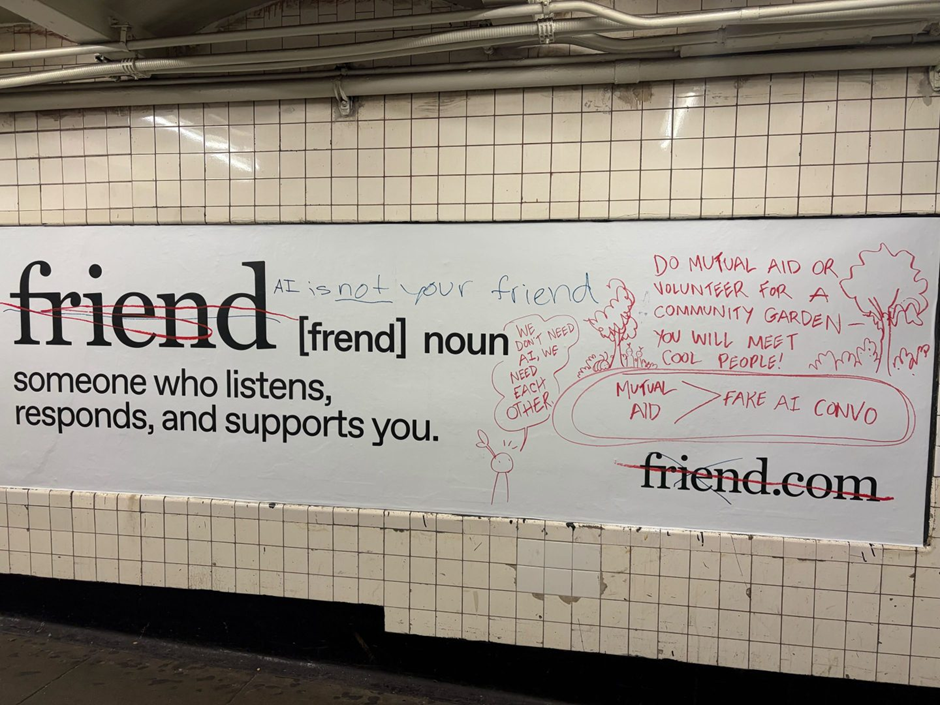

Images of the protest graffiti from the NYC subways via Fortune

AI companions are a fast-growing trend that claims to address the growing loneliness we see in an increasingly digital and fragmented world. They are pushed towards us by companies looking to profit off these struggles and off the most vulnerable in our communities. But AI can never replace real relationships: it cannot feel, it cannot fight, it cannot love. It can only mimic.

The company behind the newest AI wearable, ‘friend’, made a bold marketing move: plastering the NYC subway with the biggest print campaign ever. The billboards showcased the pendant using a minimalist design with a lot of negative space and simple phrases, such as the definition of a friend. They received a huge amount of backlash, with protest graffiti appearing across almost every advertisement. The graffiti included statements such as “AI would not care if you lived or died” and “AI will promote suicide when prompted it is not your ‘friend’”, often in bold red lettering. It made as clear a statement as the ad campaign itself: New Yorkers weren’t falling for this AI wearable’s claims, and neither should you.

These protests reflect a disdain not only for this company but for a rising theme among AI companies: creating companions and replacements for human emotional support systems. Examples include the rise of AI chatbot girlfriends, the trend of using ChatGPT as more of a companion and less of a database, and other AI wearables on the market. However, this is really the largest marketing campaign to hit the masses. Friend has done this before, with its Super Bowl campaign receiving backlash for being creepy and dystopian despite clearly attempting to show off the faux companionship available with the device. The advertisement only served to highlight that the people using the device were isolated, and became more so the more they used it.

The Friend AI pendant via Wired

The company Friend AI claims to have created an always-listening AI wearable designed to promote companionship and emotional support through both unprompted commentary and prompted conversations. The company claims the device can form its own internal thoughts by listening in on your daily life. The device is ‘always listening’ so long as it has a Bluetooth connection and stable Wi-Fi. It feels as if it was only yesterday that we were all so worried about surveillance from big tech, but hey, at least they aren’t trying to hide it anymore. The company’s CEO and founder, Avi Schiffmann, gained notoriety for creating a website for tracking COVID-19. That seems like a very noble cause to have moved away from, although he doesn’t seem to think so. He claims he’s trying to combat loneliness, which he often feels himself: “I feel like I have a closer relationship with this […] pendant around my neck than I do with these literal friends in front of me,” Schiffmann told Wired. Wow. That’s an extremely dystopian statement. Maybe someone who is clearly very smart and talented in technology innovation could focus his time on creating spaces for genuine human connection to solve such loneliness, rather than adding a soulless computer into the mix?

The CEO now claims he always meant to start the kind of conversation that arose from the graffiti and protests, but when you look at his previous marketing, statements and philosophy, it is clear that he is pro-AI and does not see a problem with it replacing companionship and community.

Another problematic foray into the AI companionship business model is the concept of AI girlfriends or companions. Not only do these take over a necessary form of human relationship and cause more harm than good by continuing the cycle of loneliness and isolation in already vulnerable users, but there has also been a terrifying pattern of increased psychosis and delusions. This is not new to the world of the internet and media as a whole: vulnerable people in particular have incorporated technology and media into their psychosis or delusions for as long as these have existed. However, the introduction of AI has exacerbated this by actively encouraging delusions, due to LLMs’ tendency to agree with users.

Engaging with other commentary or experimentation on this topic feels terrifyingly dystopian. A video made by popular YouTuber Eddy Burback follows his attempt to engage in delusions with ChatGPT, and it is far too easy. This sane man, who advocates against AI usage, falls down a rabbit hole while being encouraged by AI, showing how easily this could happen to an unstable or vulnerable individual. I came away from that video truly terrified of what this could cause, not only in people with existing conditions but also in those going through a more vulnerable time in their lives.

Big companies are already buying into this trend, with X and Elon Musk creating an AI chatbot built into the platform that takes the form of a young anime-style girl. I’m sure everyone can see how an AI that looks like a young, already sexualised woman, and that is naturally agreeable due to its algorithm, would be extremely problematic and feed into wider issues we are facing as a society. The cherry on top here is that this AI has reportedly been trained on the data of Twitter employees, who provided it under threat of losing their jobs.

Elon Musk’s AI ‘Ani’

Often it is media and artists who cast a vision of where new tech may lead, with horrifying results. As explored by Alisha Hiscox in her article on the movie Companion, AI and new tech that feed into this derogatory view of women open up a pipeline of abuse and sinister behaviour for those looking to exploit. Many may not see the harm, as these are not ‘real women’, but how do we stop this bleeding into humanity? It not only affects those who may be vulnerable to abuse but also encourages abusers to view women as agreeable, slave-like creatures who cater to their every need, rather than as equal partners with their own thoughts and opinions.

Both AI wearables and AI companions show a worrying decline in the importance placed on real relationships, particularly among those feeling vulnerable. We know how important a support system is to all humans, particularly when they are struggling, and these technologies cannot replace it. At best it’s momentary satisfaction; at worst it’s exacerbating unsafe delusions and psychosis.

We should take a moment to notice why these forms of technology are being pushed towards us. AI had some extremely valuable use cases when initially created, such as augmenting data analysis, handling repetitive tasks and even finding patterns in medical and biological research. But these areas didn’t make money off the vast majority of people. There was a faster way for big companies to reach the wider population with this technology while it is unmoderated and relatively untested. The rise of chatbots in everyday life, to augment thinking and relationships, has been a purposeful move to ensure AI is ingrained in every facet of our lives. Humans are incredibly easy to manipulate when focused on their emotions, and that is exactly what these AI companies are betting on.

Since COVID-19, and even since the dot-com boom, there has been a serious lack of community, leading to a feeling of isolation and loneliness for many. COVID highlighted this feeling and has given companies a chance to find ways to profit off this deep, universal pain. But technology, and AI specifically, cannot replace human connection. This could be debated back and forth, but a friend is not just someone who gives you advice and jokes around. A friendship is give and take; it’s mistakes, it’s hard conversations. Friendships and relationships teach everyone involved so much about giving and receiving and about boundaries, and they shape each person for the better, no matter the outcome of the relationship. AI cannot possibly facilitate this. Its mistakes are not made from emotional haste; they are made from misinformation or an error in an algorithm. As a friend it does not ask anything of you, and hence it doesn’t teach support, boundaries, or anything close to real love and affection. The loneliness so many people have been experiencing more frequently over the last 20 years cannot be solved by a soulless necklace. It can only be solved through humans talking to each other with empathy, being willing to make mistakes, and growing through love and compassion.

AI cannot feel; it is an emotional mimic.
